- InfinitePy Newsletter 🇺🇸

- Posts

- Analyzing Excel Sales Data with Python Pandas and Seaborn - Part I

Analyzing Excel Sales Data with Python Pandas and Seaborn - Part I

In the modern data-driven world, analyzing sales data can offer tremendous insights into business performance, customer behaviour, and potential growth opportunities.

🕒 Estimated reading time: 8 minutes

In the digital age, data is king. Businesses depend on data-driven insights to guide their decisions, predict trends, and optimize operations. One common source of business data is sales records, often stored in Excel spreadsheets. Here we will demonstrate how to leverage the power of Python's Pandas and Seaborn libraries to analyze sales data, visualize trends, and extract insights. We'll cover basic, intermediate, and advanced Python topics, and provide practical examples for each proficiency level.

Now, let's dive into the world of analyzing Excel data with some practical examples that are also available on Google Colab here 👨🔬.

If you're interested in learning more about working with CSV files using Python's Pandas library, please refer to one of our earlier articles here.

Setting Up the Environment

To get started, ensure you have the necessary libraries installed. If you haven't already, install Pandas and Seaborn by running:

# Import the necessary libraries import pandas as pd # pandas is used for data manipulation and analysis import seaborn as sns # seaborn is used for data visualization

First, let's import the required libraries:

# Import necessary libraries for data analysis and visualization # Regular expressions library for string matching and manipulation import re # For fetching data from the internet from urllib.request import urlretrieve # pandas is used for data manipulation and analysis import pandas as pd # seaborn is used for data visualization import seaborn as sns # matplotlib.pyplot is used for creating static, animated, and interactive visualizations import matplotlib.pyplot as plt

Downloading and Loading Data

Let's begin downloading the data files from the given URLs and saving them locally.

# Download the customers data and save it as 'customers.xlsx' urlretrieve("https://infinitepy.s3.amazonaws.com/samples/24c4e0bb-3603-4848-b051-8b68a467783d/customers1000.xlsx", "customers.xlsx") # Download the orders data and save it as 'orders.xlsx' urlretrieve("https://infinitepy.s3.amazonaws.com/samples/24c4e0bb-3603-4848-b051-8b68a467783d/orders1000.xlsx", "orders.xlsx")

Loading the data into pandas DataFrames.

# Load the 'customers.xlsx' file into a DataFrame called customers_df customers_df = pd.read_excel('customers.xlsx') # Load the 'orders.xlsx' file into a DataFrame called orders_df orders_df = pd.read_excel('orders.xlsx')

Print the first 5 rows of the customers and orders DataFrame

In our sample dataset, the customers DataFrame includes the following columns:

CustomerID

Name

Email

Address and

Phone

The orders DataFrame contains these columns:

OrderID

OrderDate

CustomerID

Product

Quantity and

Price.

The following lines of code calls the 'head' method on the 'customers_df' and orders_df DataFrame. The 'head' method returns the first 5 rows of the DataFrame by default. This is often used to quickly inspect the first few records to understand the structure and content of the DataFrame.

# Print the first 5 rows of the customers DataFrame customers_df.head()

First 5 rows of the customers DataFrame.

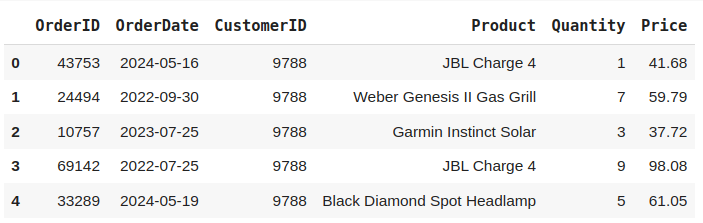

Print the first 5 rows of the orders DataFrame.

# Print the first 5 rows of the orders DataFrame orders_df.head()

First 5 rows of the orders DataFrame.

Getting to Know Your Data

At a basic level, exploring the structure and summary of the data helps in understanding its content.

# The following line calls the 'info()' method on 'customers_df'. # This method is used to get a concise summary of a DataFrame. customers_df.info()

Running the code above will produce the following output.

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1000 entries, 0 to 999

Data columns (total 5 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 CustomerID 1000 non-null int64

1 Name 1000 non-null object

2 Email 1000 non-null object

3 Address 1000 non-null object

4 Phone 1000 non-null object

dtypes: int64(1), object(4)

memory usage: 39.2+ KBThe 'info()' method provides a summary of the DataFrame, including:

The range index (like DataFrame row count);

The columns and their data types;

The non-null counts (how many non-null values are present in each column);

Memory usage of the DataFrame.

This information is incredibly useful for understanding the structure and integrity of your data, especially when dealing with missing values, data cleaning, and ensuring that datasets are loaded as expected.

# The following line calls the 'info()' method on 'orders_df'. # This method is used to get a concise summary of a DataFrame. orders_df.info()

Running the code above will produce the following output.

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 5507 entries, 0 to 5506

Data columns (total 6 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 OrderID 5507 non-null int64

1 OrderDate 5507 non-null datetime64[ns]

2 CustomerID 5507 non-null int64

3 Product 5507 non-null object

4 Quantity 5507 non-null int64

5 Price 5507 non-null float64

dtypes: datetime64[ns](1), float64(1), int64(3), object(1)

memory usage: 258.3+ KBChecking for missing values

# Checking for missing values customers_df.isnull().sum()

Running the code above will produce the following output.

CustomerID 0

Name 0

Email 0

Address 0

Phone 0

dtype: int64# Checking for missing values orders_df.isnull().sum()

Running the code above will produce the following output.

OrderID 0

OrderDate 0

CustomerID 0

Product 0

Quantity 0

Price 0

dtype: int64The '.isnull()' method is called on the DataFrame where it returns a DataFrame of the same shape as 'customers_df', but with boolean values:

True where the elements in the original DataFrame are NaN (Not a Number),

and False where they are not.

'.sum()' is called on the DataFrame returned by '.isnull()' and this sums up the True values (which are interpreted as 1) along the columns (default axis). So '.sum()' will return a Series with column names as the index and the count of NaN values in each column as the values. The result is a Series that tells us how many missing values (NaNs) there are in each column of the 'customers_df' DataFrame.

A Pandas Series is a one-dimensional array-like object that can hold various types of data, similar to a column in an Excel sheet or a SQL table. It is part of the Pandas library, which is used extensively for data manipulation and analysis in Python.

Check for duplicate rows

The following line of code checks for duplicate rows in the DataFrame.

'duplicated()' is a method that returns a Series indicating whether each row is a duplicate. A duplicate row means that it has the same data as a row that has already been encountered.

'sum()' is then called on the resulting Series to count the number of True values.

Each True indicates a duplicate row, so 'sum()' gives the total count of duplicates. The result is the total number of duplicate rows in the DataFrames.

# Check for duplicate rows customers_df.duplicated().sum()

Running the code above will produce the following output.

0# Check for duplicate rows orders_df.duplicated().sum()

Running the code above will produce the following output.

0Plotting a histogram of the 'Price' column in the 'orders_df' DataFrame

# Set Seaborn theme for better aesthetics sns.set(style='darkgrid') # Plotting a histogram of the 'Price' column in the 'orders_df' DataFrame # Using 20 bins for the histogram and setting the title of the plot to 'Price' # This also adds axis labels and adjusts fontsize for better readability plt.figure(figsize=(10, 6)) hist_plot = sns.histplot(orders_df['Price'], bins=20, kde=False, color='skyblue') hist_plot.set_title('Price Distribution', fontsize=16, weight='bold') hist_plot.set_xlabel(

Price Distribution.

Conclusion

This guide, Part I, has introduced the fundamental techniques for utilizing Python's powerful Pandas and Seaborn libraries to perform practical sales data analysis, laying the groundwork from basic to advanced levels. In the next segment, we will delve deeper into selecting and filtering data, merging DataFrames, calculating total revenue by product, and much more.

By following these steps, you will be equipped to transform your raw data into insightful visualizations and analyses that enable data-driven decision-making. Continue practicing with real datasets to further hone your skills and solidify your understanding.

Feel free to reply to this newsletter with any questions or topics you'd like us to cover in the future.

If you liked this newsletter, don't forget to subscribe to receive regular updates. Share with your friends and colleagues interested in Python and let's grow together in our community of programmers!

Remember, the key to mastery is practice and persistence. Happy coding! Until the next edition, keep programming! 👨💻

InfinitePy Newsletter - Your source for Python learning and inspiration.